Calculating the shortest path between two nodes in large graphs can be a time-consuming process. One approach to solving this is to calculate an estimated path. There are many situations where the exact path isn’t necessary; an estimated path is good enough. This is the case especially if the performance benefits of estimating are high enough. I’ll look at implementing the algorithm from A Sublinear Algorithm for Approximate Shortest Paths in Large Networks in F#. It offers a hefty performance boost at the cost of some preprocessing; well worth the time.

To set the stage, imagine a large, highly-connected graph with millions of nodes. Common examples are a social graphs and networking graphs. What is the fastest way to find the shortest path between two nodes? One method is to use breadth first search (BFS) from the starting node, expanding its search until the target node is found. In a similar, but faster fashion, there is a BiBFS (a bidirectional search simultaneously from the source and target nodes, and stopping when the searches meet). Another option is to preprocess the graph and store an index the nodes and shortest paths. As you can imagine, indexing a large graph isn’t particularly practical. A common solution to this is only index a portion of the nodes. That’s what will be investigated today.

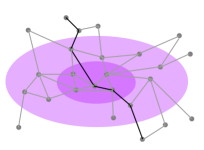

The paper describes, what they call the Wormhole algorithm. It is a method of dynamically selecting a core group of nodes to be indexed. This method takes advantage of the characteristic of highly connected graphs. As a preprocessing step, the core nodes are selected and indexed. Once this is done searches use a modified BiBFS. A path can still be found directly from source to target. But a path can also be found when both searches come in contact with the core group of nodes. At this point, the search can look up the indexed result of part of the path to improved the performance, while accepting that the ultimate path will now be an estimate, not necessarily the fastest path.

Before getting into the details of the algorithm, there is a bit of structure to define.

1 | type Graph = { |

A bit of preparation is required. The core group of nodes must be selected and indexed. First, to select a core group of nodes a random node is selected from the graph and placed into what is called the “inner ring”. It’s neighbors are then placed in the “outer ring”. The algorithm then processes all nodes in the outer ring in the similar way. One difference is nodes from the outer ring are not selected randomly. Since the goal is to leverage high connectivity, the node in the outer ring that has the most connections to nodes in the inner ring is selected. The selected node is placed in the inner ring. Then its neighbors are added to the outer ring. This process repeats until the size of the inner ring meets the desired size. At this point the processing is complete. The inner and outer rings are combined into a core graph. The result is a highly connected group of core nodes to be used when performing partial path estimation. This is what will be used moving forward.

1 | /// Create an inner and outer ring of most connected verticies |

Next, the core graph must be indexed. The index is a mapping of every node pair combination and the shortest path between them (start, target) -> path. Although computationally intensive, it is a much smaller graph, making it viable. This is also where we get the performance boost. Once path finding hits the core group of nodes, a fast index lookup can be done instead of needing to calculate the shortest path on the fly.

1 | /// Index a graph |

Creating the core graph along with its index looks like this. For this test I’ve selected an s of 600, which means the inner ring needs to get to a size of 600. This value is really dependent on the properties of the graph in question. The size and connectedness of the graph will impact performance. For a specific graph some experimentation may be required. But once determined, this process only needs to be run once. It can then be saved and used for pathfinding later.

1 | let s = 600 |

Now that the core graph has been created, the next step is to look at what searching looks like. Before I get to the BiBFS code, I have a supporting enqueue that conditionally adds a node to a queue based on whether the current search has found a path to the core graph yet.

1 | let inline enqueue (queue: Queue<string list>) (coreNodes: Map<string, bool>) (portalPath: (string list) option) (path: string list) :(string list) option = |

At a high level, the algorithm simultaneously does two BFSes, one from the start node and one from the target node. When the two searches meet, the shortest path is found. But, the interesting aspect is the integration of the core graph. The algorithm keeps track of when the search touches one of the core nodes. If both searches connect, there is no change. But, if both searches hit the core before each other, then the path will traverse through the core set of nodes. This path is looked up in the index. This is also where the performance comes from.

1 | /// bfs with wormhole algorithm |

That’s pretty much it. There is preprocessing to provide a partial index that allows for faster search results. Then there the search uses this index. So how do the results look? It should be noted any results are going to be highly dependent on the characteristics of graph as well as the size of the core indexed graph. As with anything related to performance take this with a grain of salt. But the results can be pretty impressive. Below are some sample path finding times from random nodes of a 10 million node graph. Is many cases the wormhole version is drastically faster, although it often comes at the cost of not-quite-the-shortest-path. The index can provide upwards of a 90% performance improvement, but even a nominal improvement is often seen. There is some overhead because of the extra portal node checks, so occasionally the search actually takes longer, if the index isn’t used.

1 | BiBFS Wormhole Gain Length (delta) |

There are a couple ways the provided code could be improved for performance an estimated path. But even a relatively naive implementation provides some nice benefits. Hopefully you found some value in this dive into estimated shortest path finding and selective indexing. If nothing else, it is valuable to consider that estimates can often be good enough, especially on large datasets where performance is an issue. Until next time.

References:

A Sublinear Algorithm for Approximate Shortest Paths in Large Networks (original source: https://arxiv.org/pdf/2406.08624)